'2 sekunden daten' (German for '2 seconds of data') is an installation about what we call artificial intelligence, but it is not about machines reasoning. It is about how they absorb and encode the breadth of human culture, with all its bias but also its beauty.

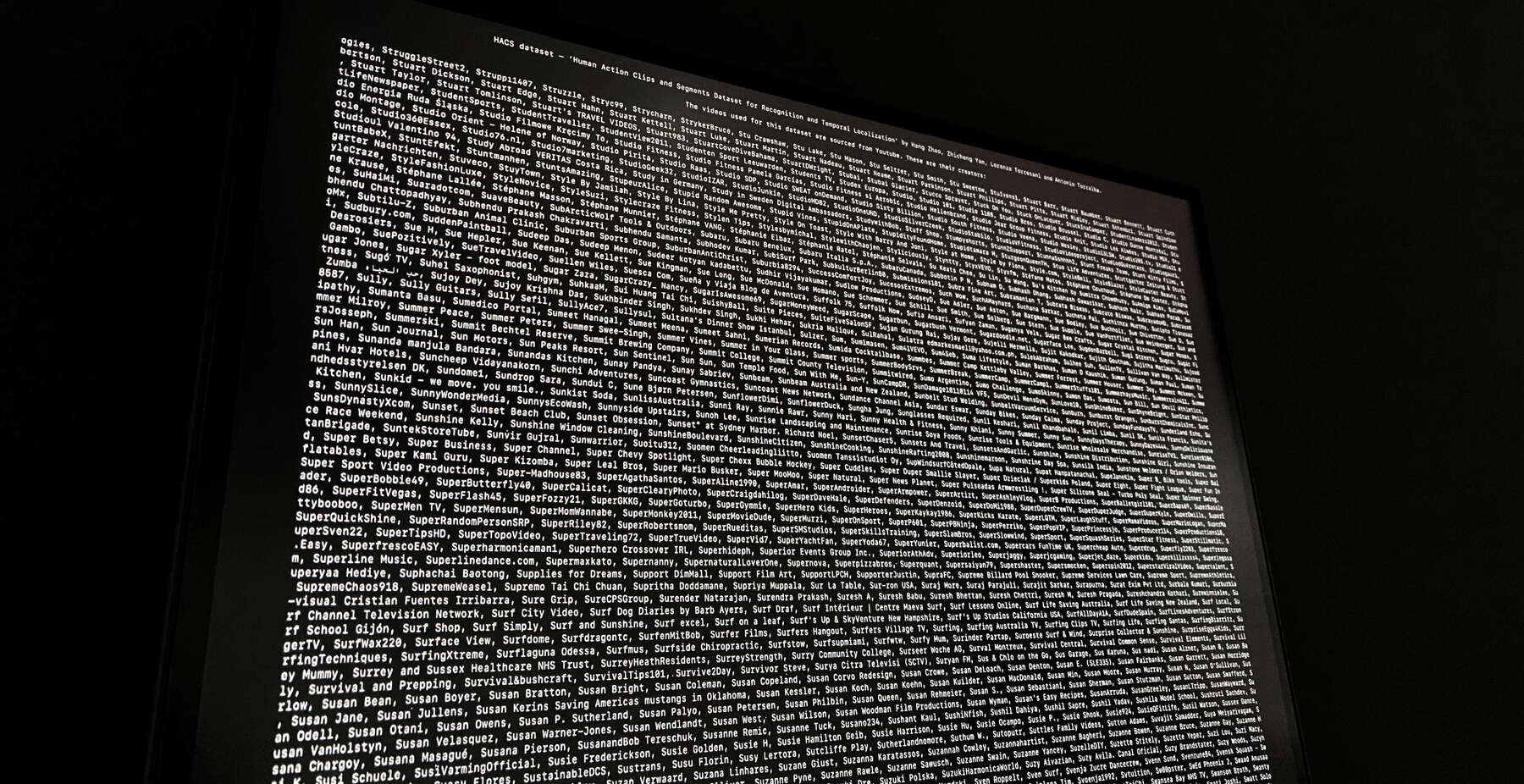

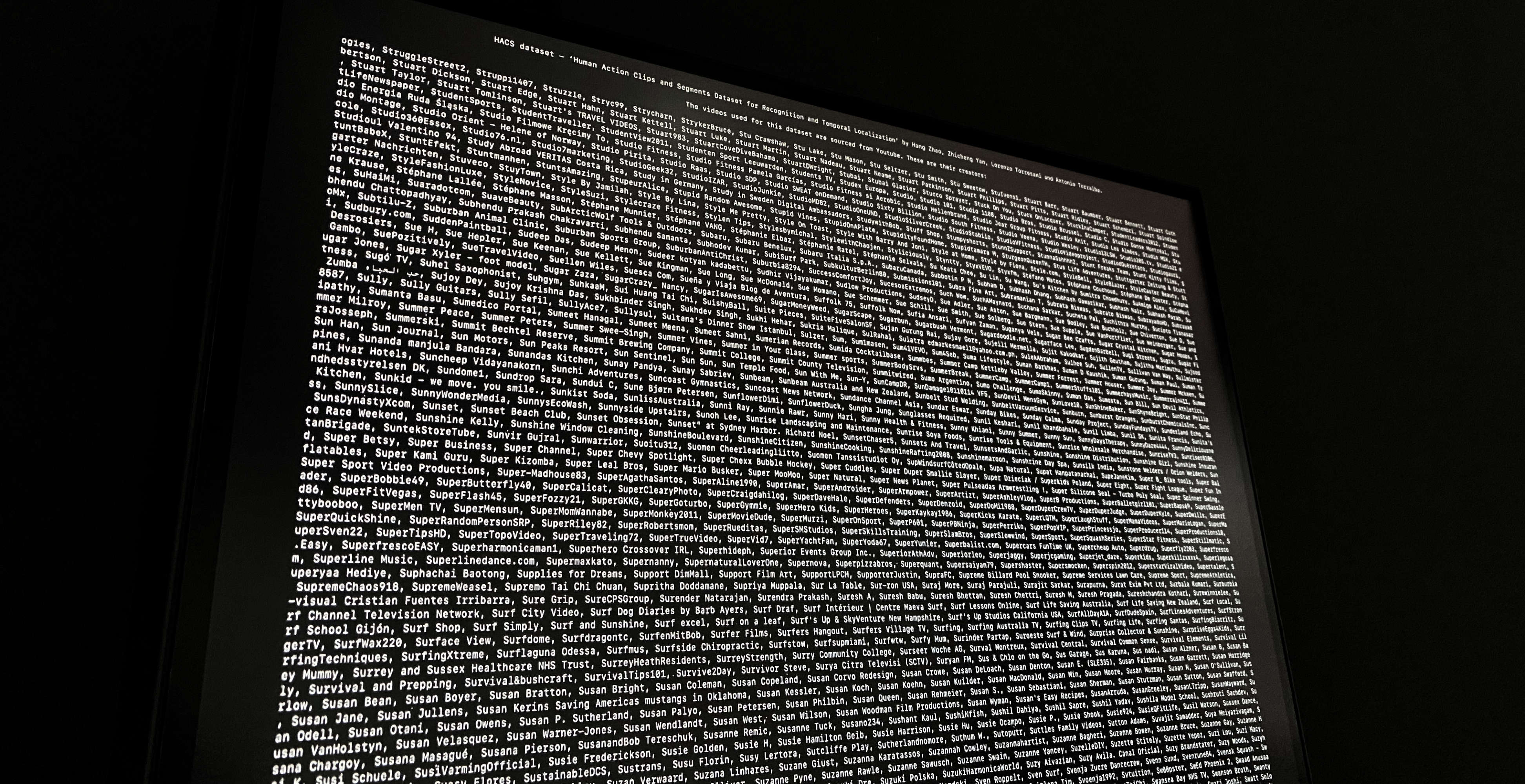

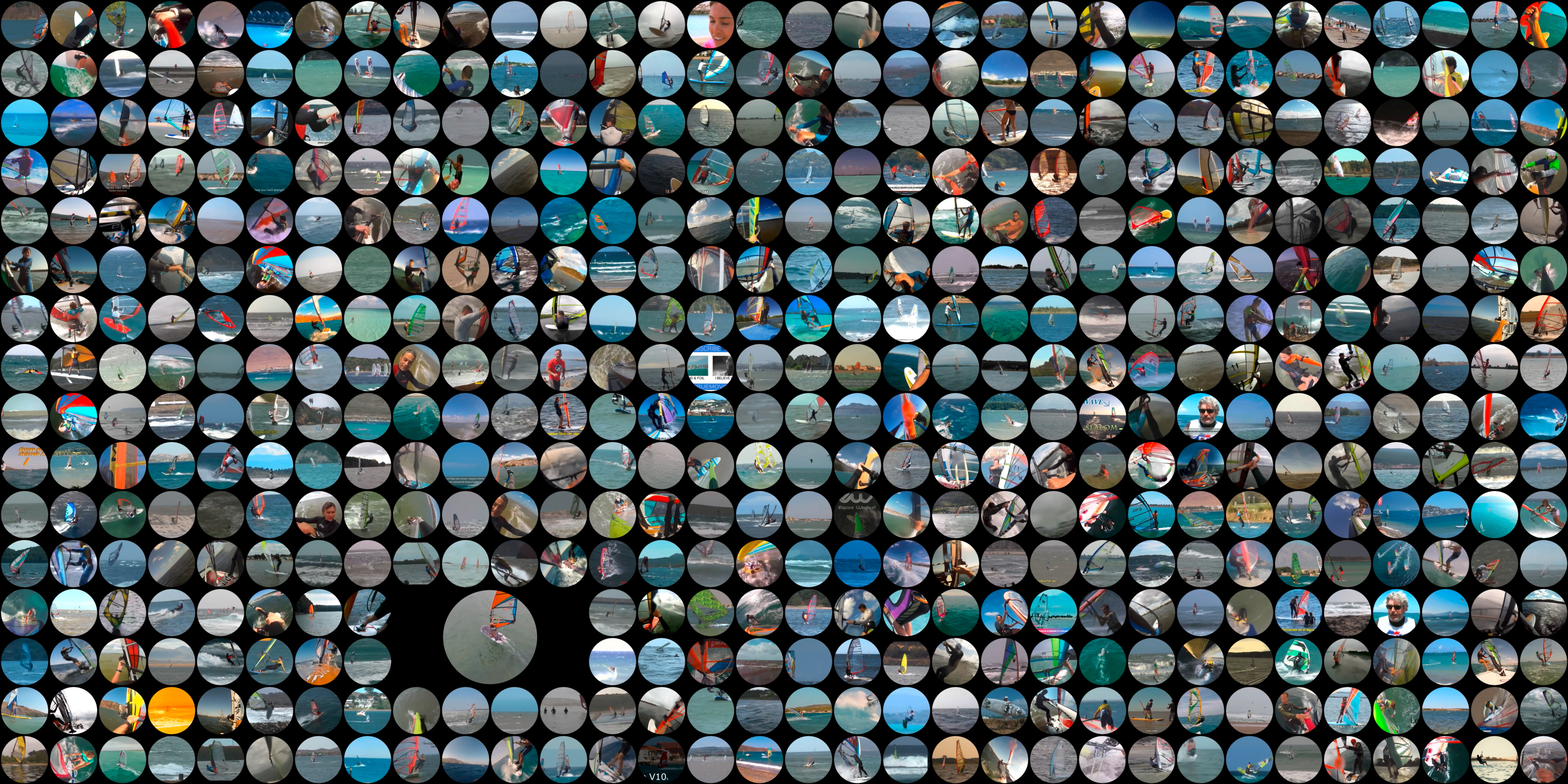

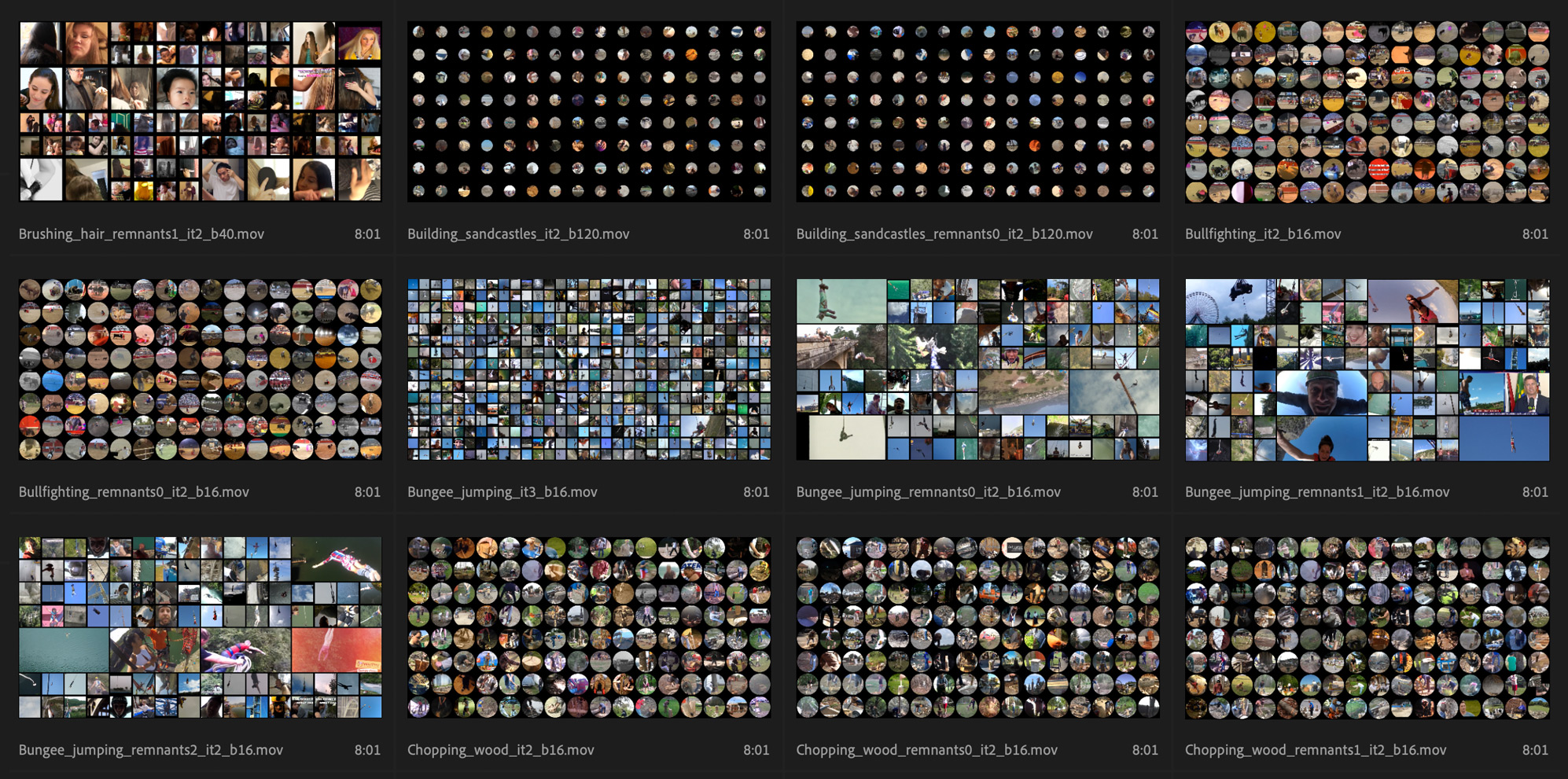

Intelligence is about understanding the world one lives in. For machines to become intelligent, they have to learn about us and our world. Like children, they learn by observing: they 'train' on text, images and videos, vast datasets that have been dubbed the 'digital gold' of our time. 2 sekunden daten visualizes one such machine-learning dataset, 'HACS: Human Action Clips and Segments Dataset for Recognition and Temporal Localization', which contains 1.5 million two-second YouTube clips compiled to teach machines to recognize human behaviours. The installation makes this data comprehensible in a non-verbal, intuitive way. It translates from machine to human, letting us understand how this apparent magic works while also shedding light on the complexity of algorithmic discrimination.

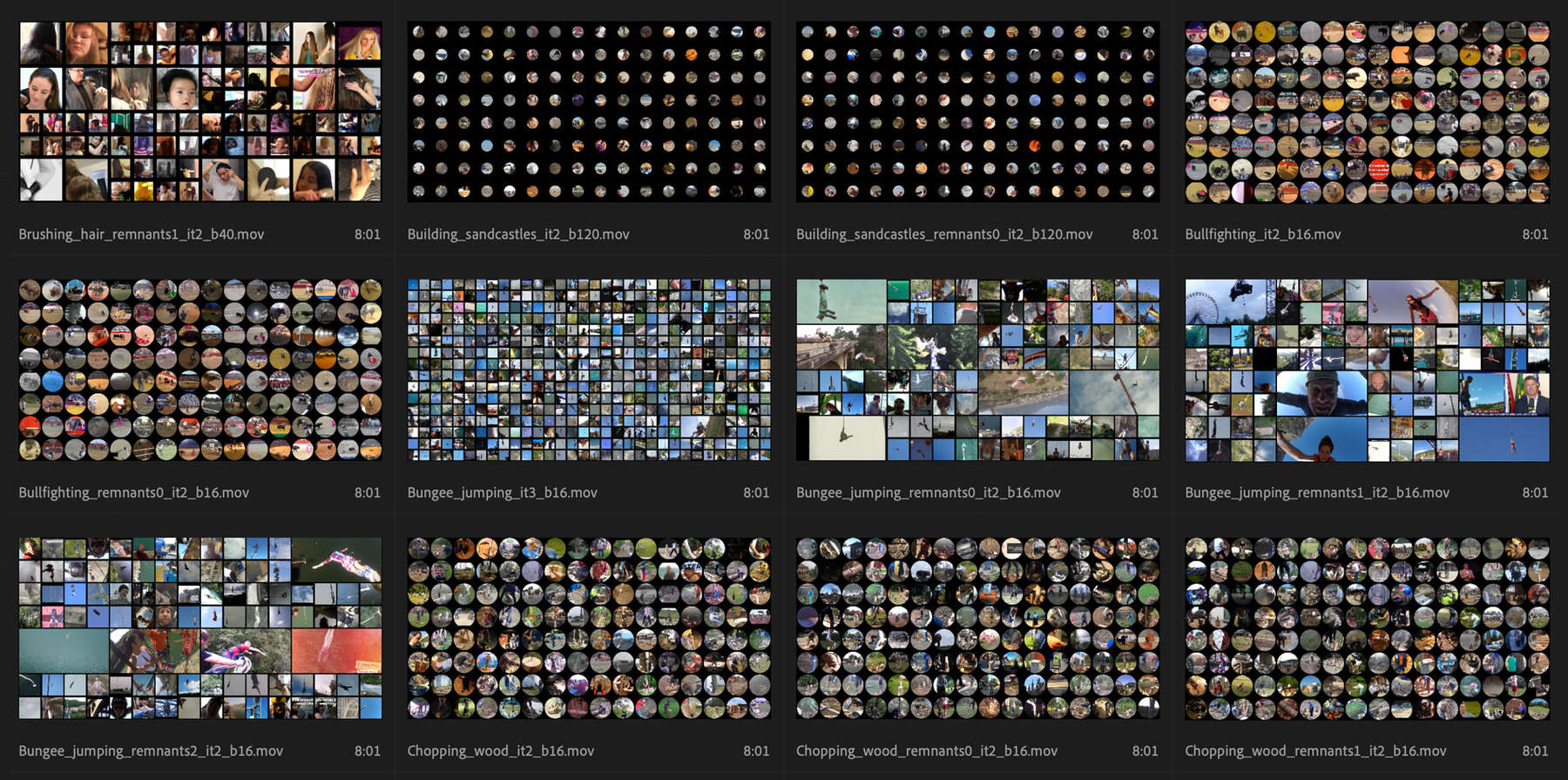

Take the label 'applying make-up': its depiction on YouTube (influencers giving make-up tips) is strikingly different from our own daily routines in the bathroom. Or take 'brushing teeth', where, among thousands of humans, we can spot a zookeeper brushing the teeth of an iguana. Moments like these make us realize that this data, however vast, still covers only a fraction of what would be needed.

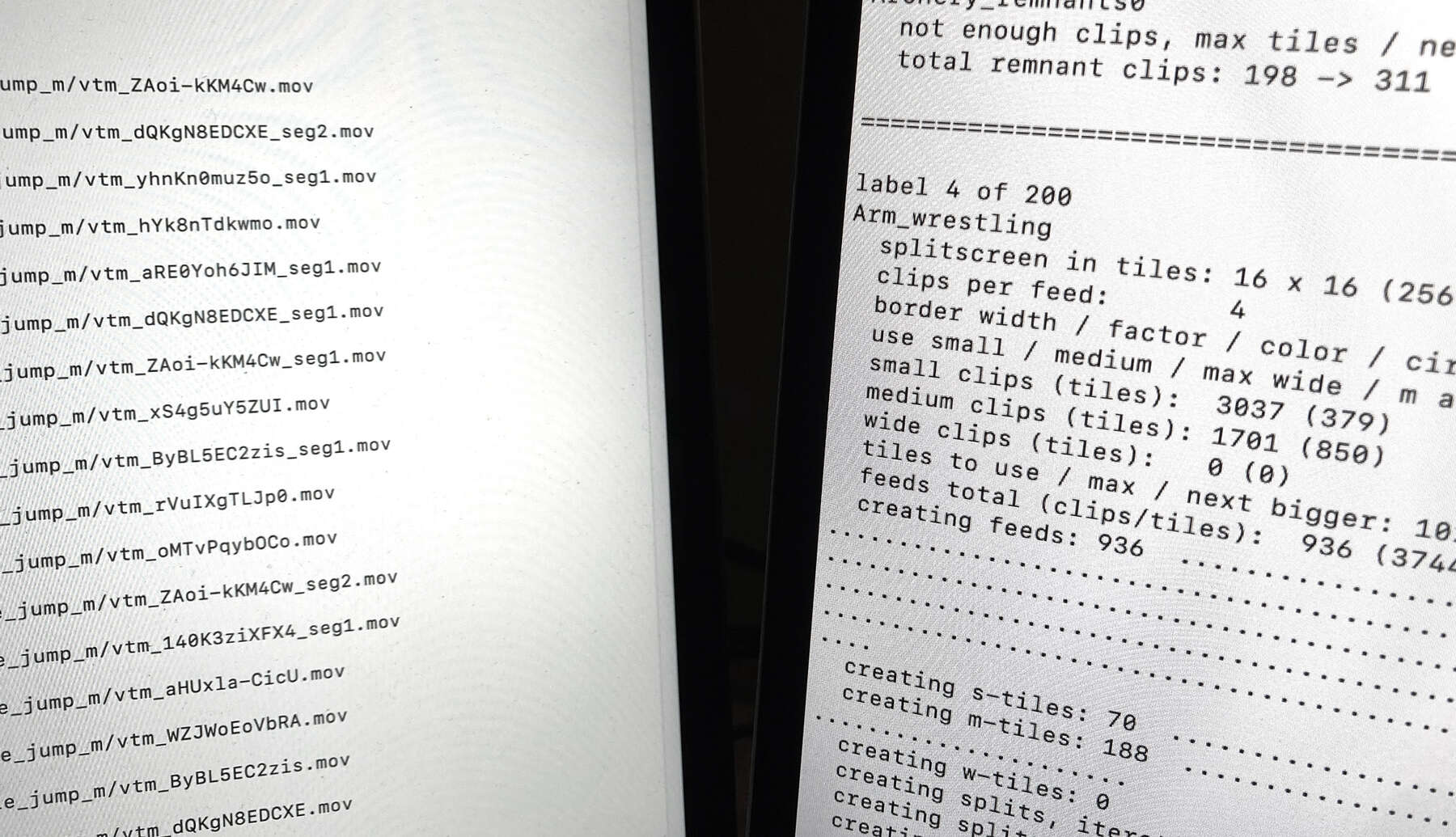

The project was custom coded, mainly using Python to process the dataset and FFmpeg for the video portion.
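The project's own code is not published here, so the following is only a minimal sketch of what such a Python-plus-FFmpeg pipeline could look like. It assumes a CSV annotation list with hypothetical columns `classname`, `youtube_id`, `start` and `end`, and locally downloaded source videos; the sample rows, file names and helper names (`group_clips_by_label`, `ffmpeg_cut_command`) are illustrative assumptions, not taken from the installation. It groups clips by action label and builds, without running, the FFmpeg call that cuts out each two-second segment.

```python
import csv
import io
from collections import defaultdict

# Hypothetical annotation excerpt for illustration only; the column names
# are an assumption, not the exact HACS file layout.
SAMPLE_CSV = """classname,youtube_id,start,end
brushing teeth,abc123,12.0,14.0
applying make-up,def456,3.5,5.5
brushing teeth,ghi789,40.0,42.0
"""


def group_clips_by_label(csv_text):
    """Group (youtube_id, start, end) tuples under their action label."""
    groups = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        groups[row["classname"]].append(
            (row["youtube_id"], float(row["start"]), float(row["end"]))
        )
    return dict(groups)


def ffmpeg_cut_command(src, start, end, dst):
    """Build (but do not run) an FFmpeg call that keeps [start, end) of src."""
    return [
        "ffmpeg", "-y",               # overwrite dst if it already exists
        "-ss", f"{start:.3f}",        # seek to the clip start
        "-i", src,
        "-t", f"{end - start:.3f}",   # keep only the clip duration
        "-an",                        # drop the audio track
        "-c:v", "libx264",            # re-encode video as H.264
        dst,
    ]


if __name__ == "__main__":
    for label, clips in group_clips_by_label(SAMPLE_CSV).items():
        for i, (vid, start, end) in enumerate(clips):
            cmd = ffmpeg_cut_command(f"{vid}.mp4", start, end, f"{label}_{i}.mp4")
            print(" ".join(cmd))
```

Each command list could then be passed to `subprocess.run(cmd, check=True)` to actually cut the clips; building the arguments separately keeps the dataset logic testable without FFmpeg installed.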

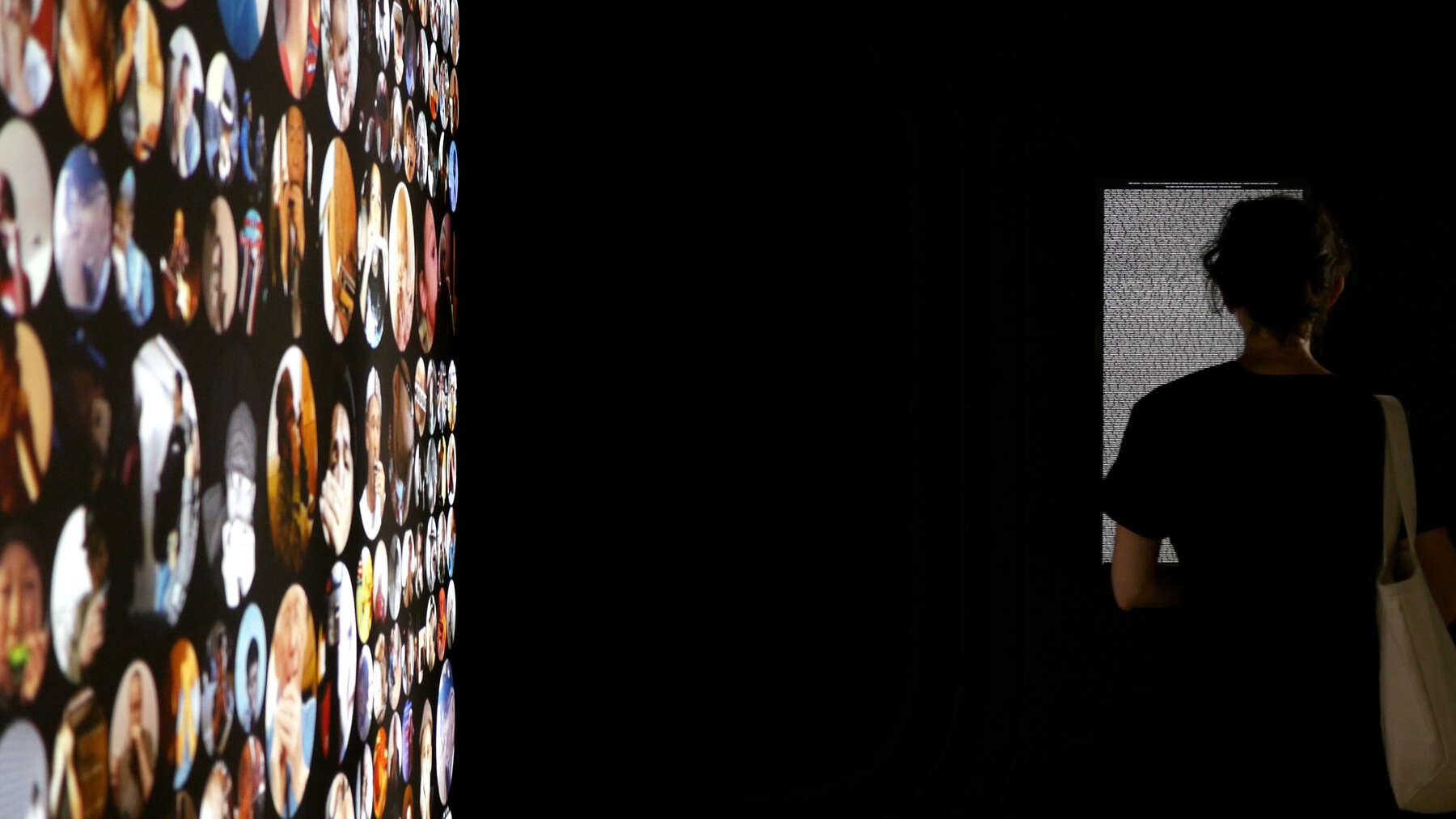

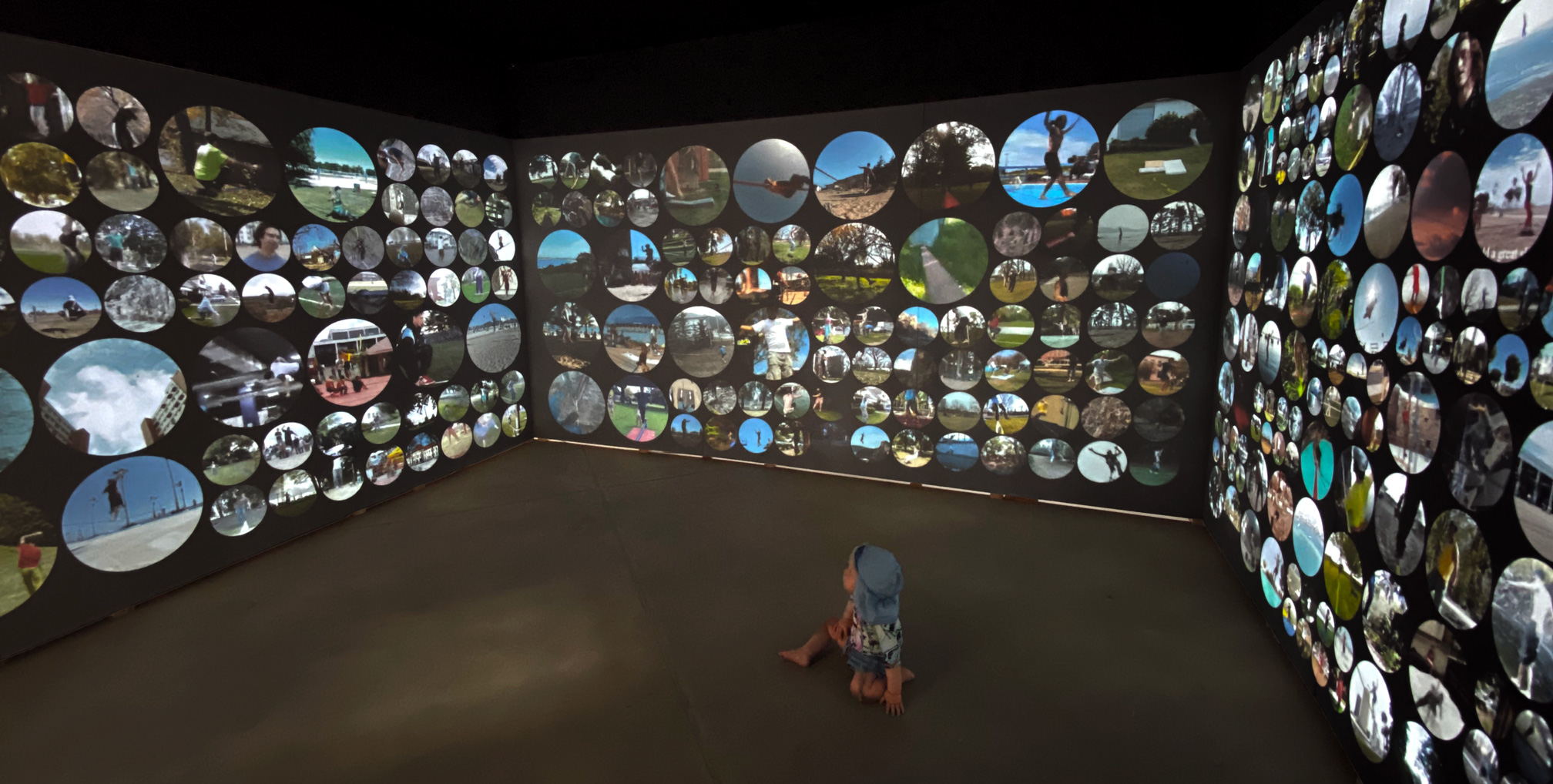

2 sekunden daten had its premiere at the 48h Neukölln Arts Festival 2021 as an immersive installation using three projectors plus a screen.

After its premiere in Berlin, 2 sekunden daten was exhibited in Russia at 48h Novosibirsk (48 часов Новосибирск, a joint venture of the Goethe-Institut, ZK19 and 48h Neukölln) from 17 to 19 September 2021. As the exhibition was much smaller, the work was shown in a minimal set-up using only two wall-mounted monitors.

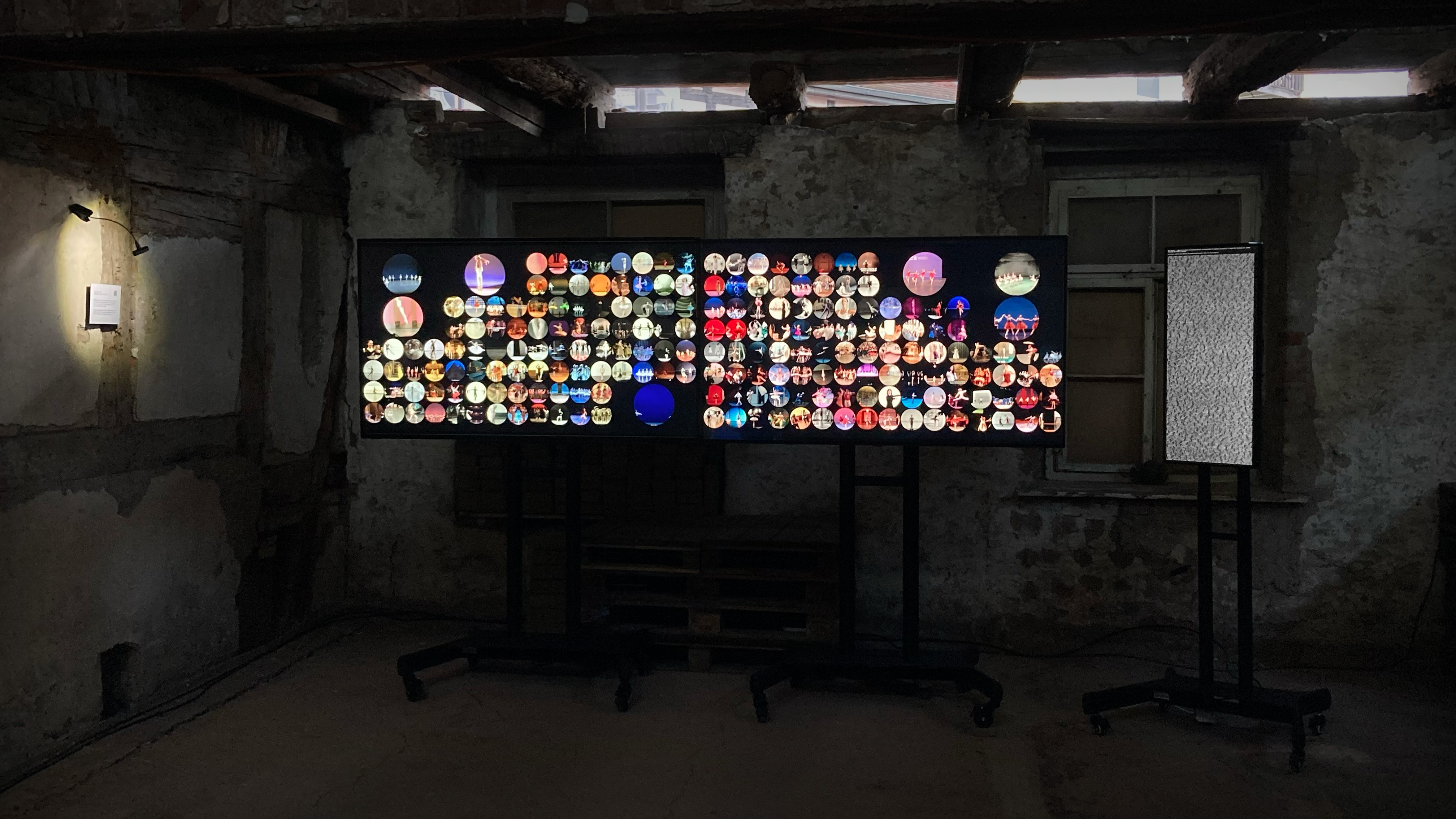

In September 2022, 2 sekunden daten was also exhibited at the Jahrestagung der Gesellschaft für Medienwissenschaft (the annual convention of the German Society for Media Studies), hosted by the Martin Luther University Halle-Wittenberg. The exhibition space was rather special: an old brewery dating from 1718. This time, the set-up consisted of two large 4K screens plus a smaller one for the credits.

Squares? Circles? Squircles?